When an organization of any size looks at the cloud, data migration becomes the focal point of every discussion. The available data transfer options can help you achieve your goal. Among the command-line methods, AzCopy is the best tool for migrating a reasonable amount of data. You may prefer this tool if you have hundreds of GB of data to migrate and sufficient bandwidth. You can use it to copy or move data between a file system and a storage account, or between storage accounts. The tool can be deployed on both Windows and Linux systems. It is built on the .NET Framework for Windows and on .NET Core for Linux. It uses Windows-style command-line options on Windows and POSIX-style command-line options on Linux.

Let me explain how to do it, step by step, on a Windows system.

First, download the latest version of the AzCopy tool for Windows.

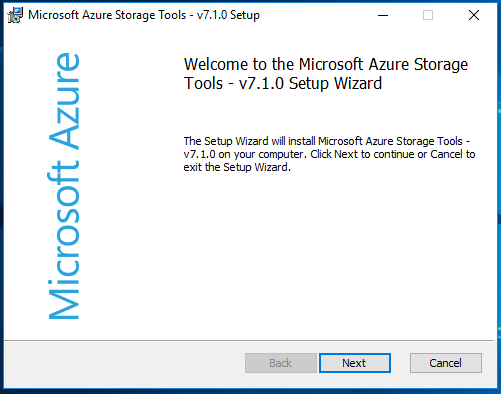

Once it has downloaded, run the .msi file. Click on Next to continue the installation.

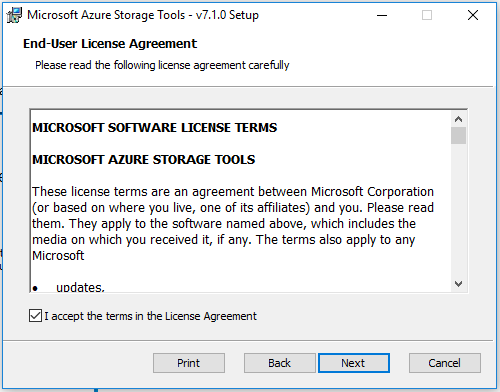

Accept the license agreement and click on Next.

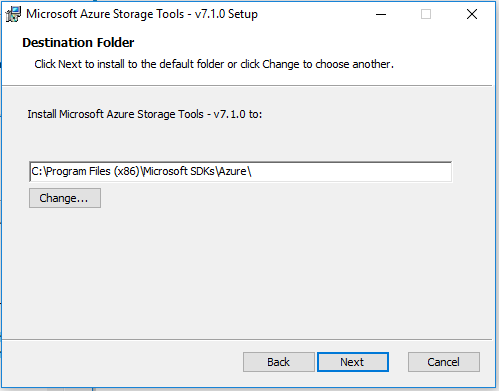

Define the destination folder and click on Next to continue.

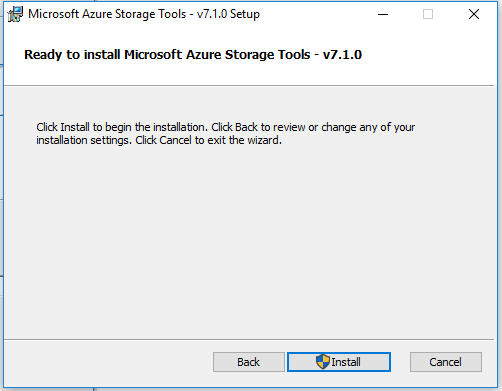

Click on Install to begin the installation.

Once the installation has completed successfully, click on Finish to exit the installation wizard.

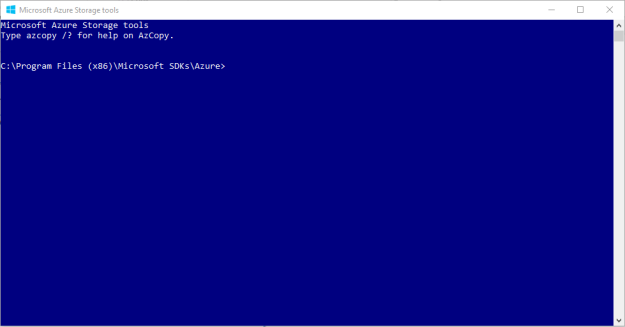

Open the “Microsoft Azure Storage command line” tool from the programs list.

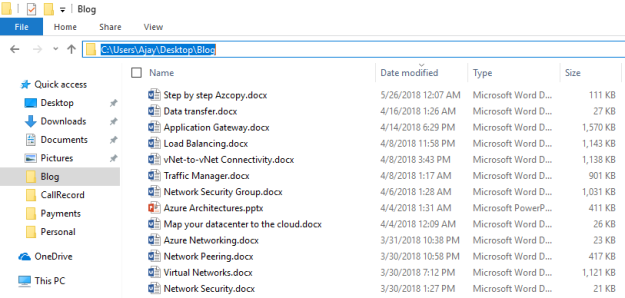

Now, identify the source and destination locations and their types. If I am copying data from a local filesystem to cloud blob storage, then the local filesystem is my source and the blob container in the cloud storage account is my destination.

Note down the location of the source data.

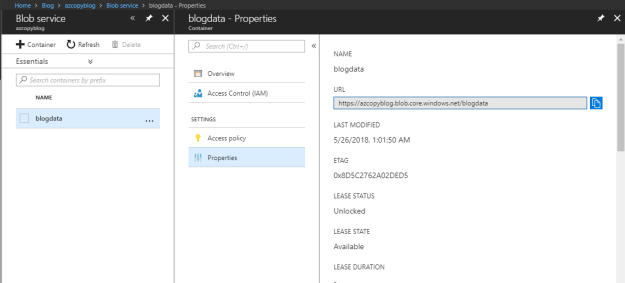

Copy the URL of your blob container.

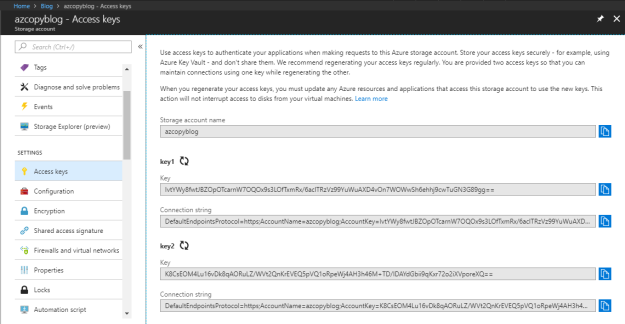
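If you would rather build the container URL than copy it from the portal, it follows a fixed pattern in the public Azure cloud. Here is a minimal Python sketch of that pattern. The function name and the account and container names are placeholders of mine, and other clouds or custom domains use a different host suffix.

```python
def blob_container_url(account_name: str, container_name: str) -> str:
    """Return the public-cloud URL of a blob container.

    Assumes the default endpoint suffix "blob.core.windows.net".
    """
    return f"https://{account_name}.blob.core.windows.net/{container_name}"


# Placeholder names, for illustration only.
url = blob_container_url("mystorageaccount", "blogposts")
print(url)
```

The printed value is what you would pass as the destination path in the copy command.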

Copy the access key. You can find “Access keys” under Settings in the storage account.

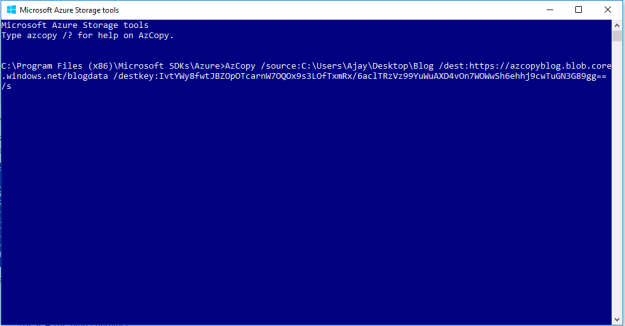

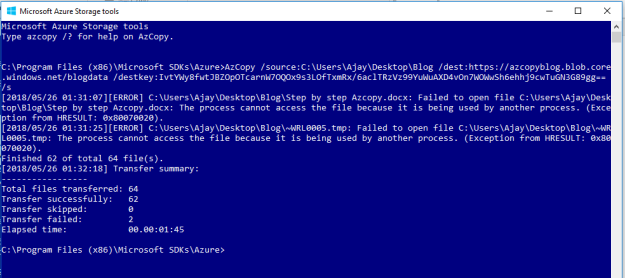

Run the AzCopy command using the following syntax: `AzCopy /Source:<source path> /Dest:<destination path> /DestKey:<access key of destination blob> /S`. The `/S` option makes the copy recursive, so subfolders are included.

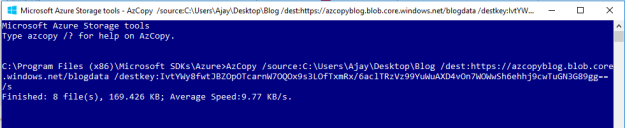
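If you run the same copy often, you can assemble the command in a small script instead of typing it each time. The Python sketch below is only one way to do it. The function names, the `AZCOPY_DEST_KEY` environment variable and the assumption that `AzCopy` is on your `PATH` are all mine, not part of the tool.

```python
import os
import subprocess


def build_azcopy_command(source, dest, dest_key, recursive=True, exe="AzCopy"):
    """Build the argument list for the Windows-style AzCopy syntax shown above."""
    args = [exe, f"/Source:{source}", f"/Dest:{dest}", f"/DestKey:{dest_key}"]
    if recursive:
        args.append("/S")  # include subfolders
    return args


def run_copy(source, dest, key_env_var="AZCOPY_DEST_KEY"):
    """Run the copy, reading the access key from an environment variable.

    The variable name is hypothetical; it keeps the key out of the script.
    """
    dest_key = os.environ[key_env_var]
    command = build_azcopy_command(source, dest, dest_key)
    return subprocess.run(command).returncode
```

Calling `run_copy(r"C:\Blog", "https://<account>.blob.core.windows.net/<container>")` would then start the same copy as the command above, with the key taken from the environment rather than pasted into the command line.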

You can monitor the copy activity.

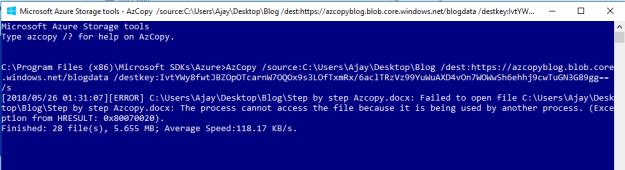

If any error occurs during the copy operation, you can see that as well.

Note: In the example below, to simulate an error scenario, I tried to copy all my blog posts, including this one, which I was still working on at the time. That is why you can see an error for that file.

The other error was for a .tmp file. We can ignore this .tmp file error.

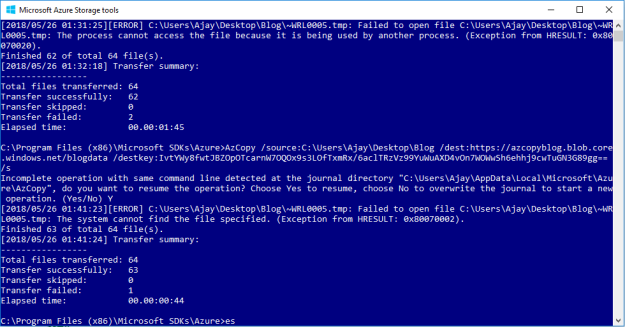
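If you capture the tool's output in a script, you can check for failed transfers automatically instead of reading the screen. The Python sketch below assumes the output ends with a summary of `label: count` lines such as `Transfer failed: 2`. The exact labels and the sample text are my assumptions, so compare them with what your version prints.

```python
import re

# Hypothetical sample, shaped like the summary I assume the tool prints.
SAMPLE_OUTPUT = """\
Transfer summary:
-----------------
Total files transferred: 12
Transfer successfully:   10
Transfer skipped:        0
Transfer failed:         2
"""

# Labels are an assumption; adjust them to match your AzCopy version.
SUMMARY_LINE = re.compile(
    r"\s*(Total files transferred|Transfer successfully|"
    r"Transfer skipped|Transfer failed):\s*(\d+)\s*$"
)


def parse_transfer_summary(output: str) -> dict:
    """Collect the counters from the summary lines of the captured output."""
    counts = {}
    for line in output.splitlines():
        match = SUMMARY_LINE.match(line)
        if match:
            counts[match.group(1)] = int(match.group(2))
    return counts


def has_failures(output: str) -> bool:
    """Return True when the summary reports at least one failed transfer."""
    return parse_transfer_summary(output).get("Transfer failed", 0) > 0
```

A wrapper script could call `has_failures` on the captured output to decide whether a retry is worth running.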

Now, let me explain how to retry. Run the same command again, and at the “Incomplete operation with same command line…” prompt, enter Y to retry the operation for the data that failed. As you can observe, the failed operation for the in-use file has now completed successfully. We can still ignore the .tmp file error.

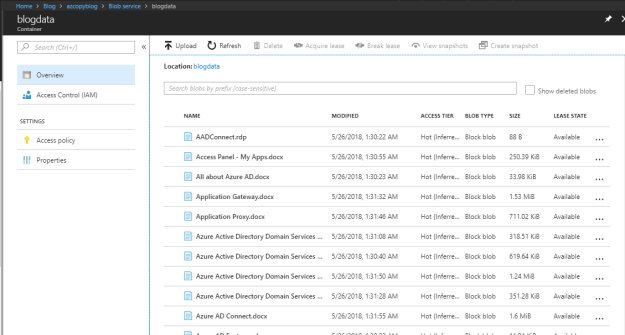

Once you have copied all the data, go to the blob container and verify that everything is there.
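For a large folder, checking by eye gets tedious, so a small script can compare the two sides. The Python sketch below assumes that a recursive upload names each blob after the file's path relative to the source folder, with forward slashes. It also assumes you already have the list of blob names from somewhere, for example an export from the portal. The function names are mine.

```python
import os


def expected_blob_names(source_dir):
    """Return the blob names a recursive upload of source_dir should produce.

    Assumption: each blob is named after the file's relative path,
    using "/" as the separator.
    """
    names = set()
    for folder, _subdirs, files in os.walk(source_dir):
        for file_name in files:
            full_path = os.path.join(folder, file_name)
            relative = os.path.relpath(full_path, source_dir)
            names.add(relative.replace(os.sep, "/"))
    return names


def missing_blobs(source_dir, blob_names, ignore_suffixes=(".tmp",)):
    """List local files with no matching blob, skipping ignorable suffixes."""
    expected = {
        name for name in expected_blob_names(source_dir)
        if not name.lower().endswith(ignore_suffixes)
    }
    return sorted(expected - set(blob_names))
```

An empty list from `missing_blobs` means every local file, apart from the .tmp files we chose to ignore, has a matching blob name.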

If you have a high-bandwidth internet connection or ExpressRoute, you can move large amounts of data using AzCopy as well, but it is the more relevant option for xyz GB of data. Here xyz stands for a three-digit number, that is, hundreds of GB.
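To judge whether your bandwidth is sufficient for a given amount of data, a rough estimate of the transfer time helps. The Python sketch below is a back-of-the-envelope calculation. The 80% efficiency factor is my assumption for protocol overhead and a shared link, not a measured value.

```python
def estimate_transfer_hours(size_gb, bandwidth_mbps, efficiency=0.8):
    """Estimate the hours needed to move size_gb over a bandwidth_mbps link.

    efficiency is the assumed fraction of the link the copy really gets.
    """
    if bandwidth_mbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("bandwidth must be positive and efficiency in (0, 1]")
    size_megabits = size_gb * 1000 * 8  # decimal GB to megabits
    seconds = size_megabits / (bandwidth_mbps * efficiency)
    return seconds / 3600


# 500 GB over a 100 Mbps link at the assumed 80% efficiency.
print(round(estimate_transfer_hours(500, 100), 1))
```

With these numbers the estimate comes to roughly 13.9 hours, so a few hundred GB fits into an overnight copy on such a link.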