In the Azure Storage Accounts blog post, I covered the details of storage accounts, access tiers, and performance tiers. A storage account is a kind of container for storage services in Microsoft Azure. Microsoft Azure provides the following storage services:

- Blob storage

- File storage

- Queue storage

- Table storage

- Disk storage

Let me explain each storage service in detail.

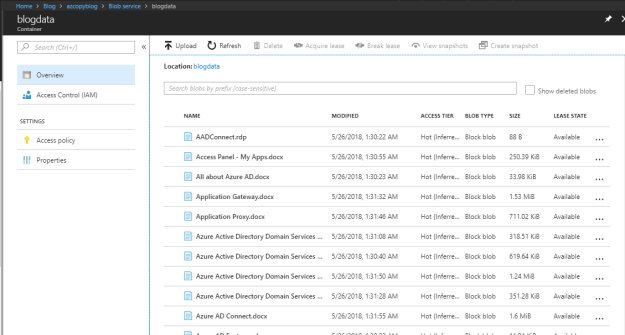

Blob storage: The name “blob” can be a bit confusing to people who are new to the world of storage. In simple terms, blob storage can hold almost any kind of file that you would store on your PC, tablet, phone, or cloud drive: for example, MS Office documents, HTML files, databases, database log files, backup files, and big data. Once stored, a blob can be accessed from anywhere in the world through URLs, the REST API, and Azure SDK storage clients. There are three types of blobs: block blobs, page blobs, and append blobs.

- Block blobs: Ideal for storing ordinary files such as text or media files. A block blob supports files up to about 4.7 TB in size.

- Page blobs: A kind of blob meant for random access, and more efficient for frequent read/write operations. A page blob supports files up to 8 TB in size and is used for OS and data VHDs.

- Append blobs: Like a block blob, an append blob is made up of blocks, but it adds the capability of appending to the file. It is generally used in logging scenarios, where logging information from multiple sources is stored and appended.
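To make the choice between the three blob types concrete, here is a minimal sketch in Python. It is not part of any Azure SDK: `BlobType`, `choose_blob_type`, and `fits` are hypothetical names used only for illustration, the size limits are simply the figures quoted above, and "TB" is assumed to mean binary terabytes.

```python
from enum import Enum

TB = 1024 ** 4  # assumption: the "TB" figures above are binary terabytes


class BlobType(Enum):
    BLOCK = "block"
    PAGE = "page"
    APPEND = "append"


# Size limits as quoted in this post; check the current Azure docs before relying on them.
ARTICLE_MAX_BYTES = {
    BlobType.BLOCK: int(4.7 * TB),
    BlobType.PAGE: 8 * TB,
}


def choose_blob_type(random_access: bool = False, append_only: bool = False) -> BlobType:
    """Pick a blob type from the access pattern described in the post."""
    if random_access:
        return BlobType.PAGE    # frequent random reads/writes, e.g. VHDs
    if append_only:
        return BlobType.APPEND  # logging-style workloads
    return BlobType.BLOCK       # ordinary files such as text or media


def fits(blob_type: BlobType, size_bytes: int) -> bool:
    """Check a file size against the limit quoted for that blob type."""
    limit = ARTICLE_MAX_BYTES.get(blob_type)
    # No limit is quoted for append blobs, so this sketch does not check them.
    return limit is None or size_bytes <= limit


print(choose_blob_type(random_access=True))  # a VM disk image
print(choose_blob_type(append_only=True))    # an application log
print(fits(BlobType.BLOCK, 5 * TB))          # larger than the quoted block blob limit
```

In practice the blob type is chosen when the blob is created, so a helper like this would sit in front of whatever upload code you use.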

File storage: Azure File storage is a highly available network file share based on the SMB 3.0 (Server Message Block) protocol, the successor to CIFS (Common Internet File System). Azure file shares can be accessed by Azure virtual machines and cloud services by mounting the share, while on-premises deployments can access them through the REST API. One capability that distinguishes it from a normal file share is that a file can be accessed from anywhere through a URL that points to the file and includes a SAS (shared access signature) token. An Azure file share can be used in the same way as a traditional file share. Let’s take a few examples to make this clearer.

- A file share to store data such as files, software, utilities, and reports.

- Applications that depend on a file share.

- Configuration files that need to be accessed by multiple sources at the same time.

- Storing crash dumps, metrics, and diagnostic logs.

These are a few examples, but in day-to-day work you will find many more. As of now, AD-based authentication and ACLs are not supported, but they may be added in the future.
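The URL-plus-SAS access mentioned earlier can be sketched as follows. This only assembles the URL string; it does not generate or validate a SAS token. The account, share, and file names are made up, the token is a placeholder, and `file_url` is a hypothetical helper rather than an SDK function.

```python
from urllib.parse import quote


def file_url(account: str, share: str, path: str, sas_token: str) -> str:
    """Build an HTTPS URL for a file in an Azure file share, with a SAS token appended."""
    # Keep '/' unescaped so directory separators in the path survive.
    encoded_path = quote(path, safe="/")
    # A SAS token is sometimes handed over with a leading '?'; normalise that.
    sas = sas_token.lstrip("?")
    return f"https://{account}.file.core.windows.net/{share}/{encoded_path}?{sas}"


# Placeholder values only; a real SAS token is issued for your storage account.
url = file_url(
    account="mystorageacct",
    share="reports",
    path="2018/q1 summary.xlsx",
    sas_token="?sv=PLACEHOLDER&sig=PLACEHOLDER",
)
print(url)
```

Anyone holding such a URL can reach the file for as long as the token is valid, so treat it like a credential.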

Queue storage: The word “queue” is not new to any experienced IT developer or professional. Azure Queue storage is a service for storing and retrieving messages asynchronously. A queue can contain millions of messages, up to the capacity limit of the storage account, and each message can be up to 64 KB in size. It can be accessed from anywhere in the world via authenticated calls using HTTP or HTTPS. The maximum time that a message can remain in the queue is 7 days. A few examples of using Queue storage are:

- Passing a message from an Azure web role to an Azure worker role.

- Converting a large number of files from one type to another, such as .png to .jpeg, by using an Azure function. As soon as you start uploading the files, the Azure function starts converting them.
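The limits described above (64 KB per message, 7 days in the queue) are easy to check on the client side before sending. The sketch below uses an in-memory `deque` as a stand-in for a real queue, so it shows only the producer/consumer pattern and the limit checks. `validate_message` and the message format are hypothetical, and the exact way the service counts message size may differ from this simple byte count.

```python
from collections import deque
from datetime import timedelta

MAX_MESSAGE_BYTES = 64 * 1024   # 64 KB per message, as quoted above
MAX_TTL = timedelta(days=7)     # maximum time a message can stay in the queue


def validate_message(body: str, ttl: timedelta = MAX_TTL) -> bytes:
    """Encode a message and reject it if it breaks the limits quoted in the post."""
    data = body.encode("utf-8")
    if len(data) > MAX_MESSAGE_BYTES:
        raise ValueError(f"message is {len(data)} bytes, limit is {MAX_MESSAGE_BYTES}")
    if ttl > MAX_TTL:
        raise ValueError(f"time-to-live {ttl} exceeds {MAX_TTL}")
    return data


# In-memory stand-in for a queue: the producer appends, the consumer pops from the left.
queue = deque()
for name in ["photo-001.png", "photo-002.png"]:
    queue.append(validate_message(f"convert:{name}"))

processed = []
while queue:
    job = queue.popleft().decode("utf-8")
    processed.append(job)  # a worker would convert the file here

print(processed)
```

The producer and the consumer never call each other directly, which is what makes the processing asynchronous: the web front end can keep accepting uploads while workers drain the queue at their own pace.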

Table storage: Azure Table storage is a service that stores structured data. It is a NoSQL datastore that accepts authenticated calls from inside and outside the Azure cloud. It is ideal for storing structured, non-relational data such as spreadsheet-like information, address books, and user data for web applications. You can store millions of structured, non-relational entities, up to the capacity limit of the storage account. A few examples of using Table storage are (courtesy: Microsoft docs):

- Storing terabytes of structured data capable of serving web scale applications.

- Storing datasets that don’t require complex joins, foreign keys, or stored procedures and can be denormalized for fast access.

- Quickly querying data using a clustered index.

- Accessing data using the OData protocol and LINQ queries with WCF Data Service .NET Libraries
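To illustrate what "structured but non-relational" and "clustered index" mean here, the following is a small in-memory model, not the Azure SDK. In Table storage every entity is identified by a `PartitionKey` and a `RowKey`, and lookups by that pair are the fast path. `InMemoryTable` and the sample address-book entities are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Entity = Dict[str, object]


class InMemoryTable:
    """Toy model of a table: entities indexed by (PartitionKey, RowKey)."""

    def __init__(self) -> None:
        self._rows: Dict[Tuple[str, str], Entity] = {}

    def upsert(self, entity: Entity) -> None:
        # The key pair plays the role of the clustered index.
        key = (str(entity["PartitionKey"]), str(entity["RowKey"]))
        self._rows[key] = dict(entity)

    def get(self, partition_key: str, row_key: str) -> Optional[Entity]:
        # Point lookup: a single dictionary access, no joins.
        return self._rows.get((partition_key, row_key))

    def query_partition(self, partition_key: str) -> List[Entity]:
        # Entities in one partition, ordered by RowKey.
        keys = sorted(k for k in self._rows if k[0] == partition_key)
        return [self._rows[k] for k in keys]


contacts = InMemoryTable()
# Entities in the same table do not need the same set of properties.
contacts.upsert({"PartitionKey": "Smith", "RowKey": "Jeff", "Email": "jeff@example.com"})
contacts.upsert({"PartitionKey": "Smith", "RowKey": "Ben", "Phone": "555-0100"})
contacts.upsert({"PartitionKey": "Harp", "RowKey": "Walter", "Email": "walter@example.com"})

print(contacts.get("Smith", "Jeff"))
print([e["RowKey"] for e in contacts.query_partition("Smith")])
```

Because there are no joins or foreign keys, data that would be split across several relational tables is usually denormalized into a single entity.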

Disk storage: Azure Disk storage is the simplest service to understand, because we already use disks as part of virtual machines, whether on-premises or in the cloud. Disk storage can be used for operating systems, applications, or any other kind of data. All virtual machines in Azure have at least two disks: an operating system disk and a temporary disk. VMs can also have one or more data disks. All disks are in VHD format and can have a capacity of up to 1023 GB. The Azure Disk storage service provides these disks in two ways, as managed disks and as unmanaged disks. These disks are further divided into two performance tiers, standard and premium.

Managed disk: Managed disks are disks that are created and managed by Microsoft, so you don’t have to worry about the availability of the underlying storage. Managed disks are available in both performance tiers; based on your requirements, you can select the right disk size and performance tier. The standard performance tier is represented by S and the premium performance tier by P. The available sizes for both performance tiers range from 32 GB to 4095 GB.

Unmanaged disk: Unmanaged disks are disks that are created and managed by you. To create them, you first create a storage account and define its availability by selecting a replication option, and then you create the unmanaged disks under it. Unmanaged disks also support the standard and premium tiers. Here, you are responsible for the availability of the disk, based on the replication method you select.

Standard tier: Standard tier disks are HDD-based and provide a limited number of IOPS. This type of disk provides a maximum of 500 IOPS.

Premium tier: Premium tier disks are SSD-based and provide high IOPS and low latency. This type of disk provides a maximum of 7500 IOPS. Premium disks are only available with a limited set of Azure VM series.
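As a rough way to apply these numbers, the sketch below picks a performance tier from an IOPS requirement. It uses only the per-disk maximums quoted in this post (500 and 7500 IOPS), which may not match current Azure limits, and `choose_disk_tier` is a hypothetical helper, not an Azure API.

```python
STANDARD_MAX_IOPS = 500    # per-disk maximum quoted for the standard (HDD) tier
PREMIUM_MAX_IOPS = 7500    # per-disk maximum quoted for the premium (SSD) tier


def choose_disk_tier(required_iops: int) -> str:
    """Return the cheapest tier whose quoted per-disk maximum covers the requirement."""
    if required_iops <= 0:
        raise ValueError("required_iops must be positive")
    if required_iops <= STANDARD_MAX_IOPS:
        return "standard"
    if required_iops <= PREMIUM_MAX_IOPS:
        return "premium"
    raise ValueError(
        f"{required_iops} IOPS exceeds the per-disk maximum of {PREMIUM_MAX_IOPS} quoted here"
    )


for workload, iops in [("file archive", 200), ("database", 5000)]:
    print(workload, "->", choose_disk_tier(iops))
```

Remember that choosing the premium tier also constrains which VM series you can use.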