Azure Import/Export service allows data transfer between Azure datacenters and customer locations. It is a secure service to send or receive medium-to-large amount of data when the bandwidth becomes bottleneck and costly. Azcopy is preferred tool for online data migration if you look Microsoft Azure data transfer options. While Azure Import/Export provides large amount of physical data transfer in secure and reliable manner. The data can be copied in one or more drives to import to and to export from Azure blob and file storages.

This Import/Export service use either 2.5-inch SSDs or 2.5/3.5-inch SATA II & III HDDs or mix of these. External HDD with built-in USB adapter and drives in external casing are not supported. Here is the quick snapshot of possible import and export data transfers.

| Job | Storage Accounts | Supported | Not Supported |

| Import | Classic

Blob Storage accounts General Purpose v1 storage accounts. |

Azure Blob storage.

Block/page blobs. Azure File storage. |

|

| Export | Classic

Blob Storage accounts General Purpose v1 storage accounts. |

Azure Blob storage.

Block, page and append blobs. |

Azure File storage. |

Points to remember while sending drives for import job.

- A maximum of 10 drives for each job.

- Use only single data volume partition.

- Data volume must be formatted with NTFS.

-

Supported external USB adaptors to copy data to internal HDDs.

- Anker 68UPSATAA-02BU

- Anker 68UPSHHDS-BU

- Startech SATADOCK22UE

- Orico 6628SUS3-C-BK (6628 Series)

- Thermaltake BlacX Hot-Swap SATA External Hard Drive Docking Station (USB 2.0 & eSATA)

Let me explain you use cases and process to perform import/export job.

You can use this service in following scenarios:

- Move data to the cloud as part of the data migration strategy.

- Data backup to the cloud.

- Data recovery from the cloud.

- Data distribution to the customer sites.

Here is the high-level process and components and locations available for Import/Export job.

Components:

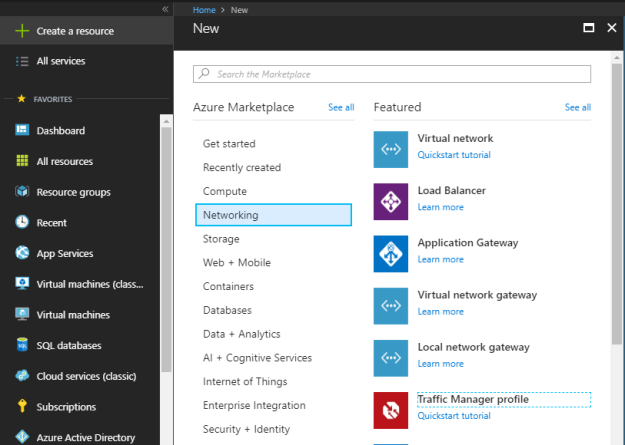

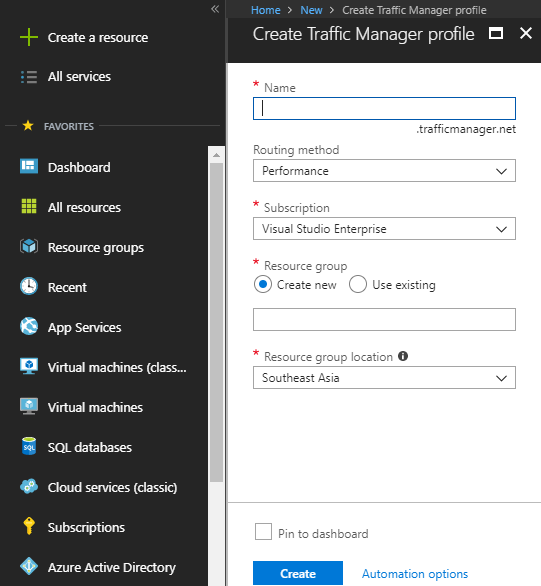

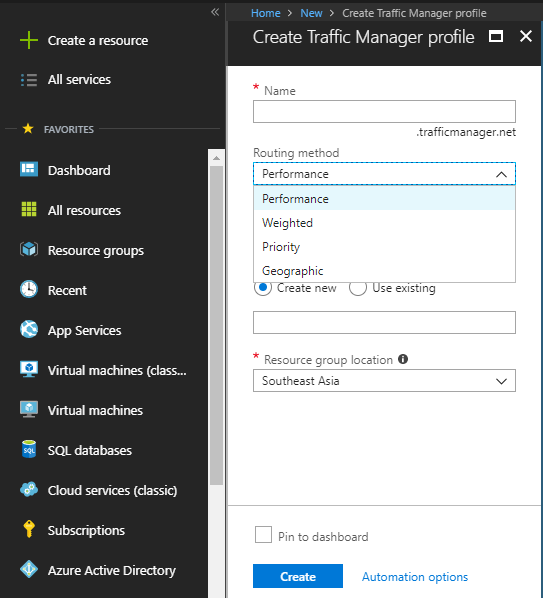

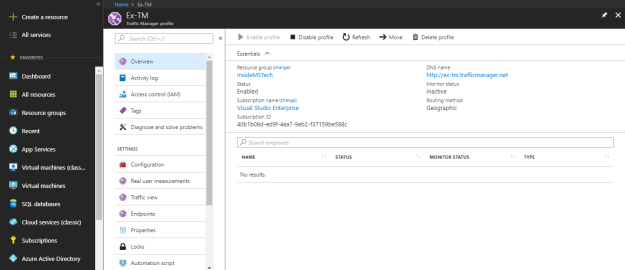

- Import/Export service in Azure portal to create a new job

- Hard disk drives to copy the data

- WAImportExport tool to prepare drives and encrypt data

Location available on the date of writing this blog post:

| Country | Country | Country | Country |

| East US | North Europe | Central India | US Gov Iowa |

| West US | West Europe | South India | US DoD East |

| East US 2 | East Asia | West India | US DoD Central |

| West US 2 | Southeast Asia | Canada Central | China East |

| Central US | Australia East | Canada East | China North |

| North Central US | Australia Southeast | Brazil South | UK South |

| South Central US | Japan West | Korea Central | Germany Central |

| West Central US | Japan East | US Gov Virginia | Germany Northeast |

Courtesy: Microsoft

If your Azure storage account location is not available in the above list, you can create a job and send it to the alternate location as specified in the tool while creating an Import job.

Next blogpost covers, step by step process of Azure Import/Export service/job.